A robot uprising is no longer a thing of science fiction.

No, seriously. The rise of Cylons, Centurions, droids - pick your artificial intelligence - is now such a concern that an entire research division is devoted to monitoring it.

The Future of Humanity Institute (FHI) at the University of Oxford in Great Britain has produced more than 200 peer-reviewed publications with over 5,500 citations, many of which examine the threats associated with advancements in AI.

Artificial intelligence, which includes the field known as "machine learning," refers to the development of computer systems capable of performing tasks that normally require aspects of human intelligence (such as visual perception, speech recognition, decision-making, and translation between languages). Apple Inc.'s (Nasdaq: AAPL) Siri and the self-driving cars of Alphabet Inc. (Nasdaq: GOOG, GOOGL), formerly Google Inc., are current examples.

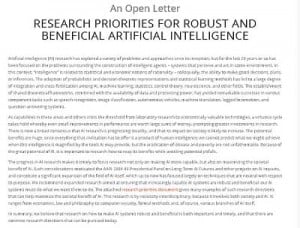

In 2015, AI reached peak buzz status after concerned scientists, researchers, and academics issued a collective warning on July 28. Those involved include Tesla Motors Inc. (Nasdaq: TSLA) CEO Elon Musk, Apple co-founder Steve Wozniak, and physicist Stephen Hawking.

In an open letter presented at the International Joint Conference on Artificial Intelligence in Buenos Aires, they warned that a military artificial intelligence arms race could soon develop unless preventative measures are taken as soon as possible.

In fact, they believe a global arms race is "virtually inevitable" if major military powers push ahead with AI weapons development. Military AI is indeed being developed. For example, the tech is already used to predict the military strategies of Islamic extremists.

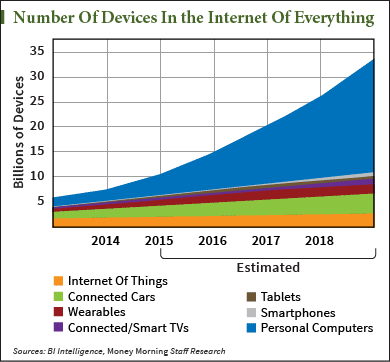

Such innovations would not be possible without two other hot-button developments in tech: Big Data and the Internet of Things (IoT).

The increasing interconnectedness of these three is another reason threats posed by unregulated AI have become a reality...

You see, with the rapid expansion of the IoT comes a massive volume of Big Data. AI is used to process, store, and make sense of that information.

But the idea of providing a machine capable of learning with unfathomable amounts of data stands to turn a scary science fiction plot into reality...

Threats of Unregulated Artificial Intelligence

There are both short-term and long-term concerns regarding the advancement of AI.

In the short term:

- Who or what will control AI development?

- How many people could lose jobs because of AI development?

Right now, it's "every innovator for themselves" when it comes to AI advancement. And there are no ethical guidelines available for the process.

Here's one current example...

Tactical AI and robotics components already used by the military can perform Big Data tasks. They can perceive targets, map, navigate, make strategic decisions, and plan long-term, multi-step attacks. Once further developed and combined, these AIs will be autonomous weapons systems capable of selecting and engaging targets without human intervention.

International humanitarian law has no specific provisions for such autonomy.

According to a May 27, 2015, article on Nature.com, "The 1949 Geneva Convention on humane conduct in war requires any attack to satisfy three criteria: military necessity; discrimination between combatants and non-combatants; and proportionality between the value of the military objective and the potential for collateral damage. (Also relevant is the Martens Clause, added in 1977, which bans weapons that violate the 'principles of humanity and the dictates of public conscience.') These are subjective judgments that are difficult or impossible for current AI systems to satisfy."

And then there is the problem of putting people out of work...

Take the relatively new concept of autonomous cars, for example. Google has driven its fleet of experimental robot cars more than 1.1 million kilometers without serious incident. In May 2014, the company premiered a new low-speed electric prototype to fine-tune city driving - with no steering wheel or brake pedal whatsoever.

On Feb. 13, 2013, PricewaterhouseCoopers predicted that the number of vehicles on the road would be reduced by 99% once autonomous vehicles are fully adopted, estimating that the fleet of American automobiles will fall from 245 million to just 2.4 million vehicles.

The U.S. Bureau of Labor Statistics reports that, as of November 2015, just under 1 million people are employed in the motor vehicle parts and manufacturing industry. An additional 2 million individuals are employed in the dealer and maintenance network. And truck, bus, delivery, and taxi drivers account for nearly 6 million professional driving jobs. Virtually all of these roughly 9 million jobs would be eliminated within 10 to 15 years with the advancement of autonomous cars.

And then there's the problem with artificial intelligence in the long term...

Neil Jacobstein, co-chair of artificial intelligence and robotics at Singularity University, wrote in a Nov. 13 article for Pacific Standard about the need for researchers to address AI control issues by building pathways into the machines to re-establish control should they exhibit undesired behaviors. His concern about superintelligence is, in a nutshell, maintaining human control over the machines' actions.

On Dec. 27, The Washington Post further expanded on the problem of superintelligence self-control by outlining philosopher Nick Bostrom's artificial intelligence "paperclip robot paradox."

It goes like this...

You build an intelligent machine programmed to make paper clips. This machine keeps getting smarter and more powerful, but never develops human values. Instead, it achieves "superintelligence." It begins to convert all kinds of ordinary materials into paper clips. Eventually it decides to turn everything on Earth into paper clips.

This, Bostrom surmises, could be the future with unmonitored artificial intelligence advancement. Bostrom, by the way, heads the University of Oxford's Future of Humanity Institute. His theory, writes The Washington Post, "reflects a broader truth: We live in an age in which machine intelligence has become a part of daily life. Computers fly planes and soon will drive cars. Computer algorithms anticipate our needs and decide which advertisements to show us. Machines create news stories without human intervention. Machines can recognize your face in a crowd."

Simply put, we are watching idly as new technologies combine - Big Data, the Internet of Things, and artificial intelligence. And we're not entirely certain whether we're in control of our inventions.
